Over the past three or four years, my colleagues and I at Red Hat have been building a set of composable command-line tools for handling virtual machine disk images. These let you copy, create, display and modify disk images using simple tools that can be connected together in pipelines, while still working very efficiently. It’s all based around the Network Block Device (NBD) protocol and the NBD URI specification.

A basic and very old tool is qemu-img:

$ qemu-img create -f qcow2 disk.qcow2 1G

which creates an empty disk image in qcow2 format. Suppose you want to write into this image? We can compose a few programs:

$ touch disk.raw

$ nbdfuse disk.raw [ qemu-nbd -f qcow2 disk.qcow2 ] &

This serves the qcow2 file up over NBD (qemu-nbd) and then exposes that as a local file using FUSE (nbdfuse). Of interest here, nbdfuse runs and manages qemu-nbd as a subprocess, cleaning it up when the FUSE file is unmounted. We can partition the file using regular tools:

$ gdisk disk.raw

Command (? for help): n

Partition number (1-128, default 1):

First sector (34-2097118, default = 2048) or {+-}size{KMGTP}:

Last sector (2048-2097118, default = 2097118) or {+-}size{KMGTP}:

Current type is 8300 (Linux filesystem)

Hex code or GUID (L to show codes, Enter = 8300):

Changed type of partition to 'Linux filesystem'

Command (? for help): p

Number Start (sector) End (sector) Size Code Name

1 2048 2097118 1023.0 MiB 8300 Linux filesystem

Command (? for help): w
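As a quick sanity check on the gdisk listing above, the partition size can be recomputed from the first and last sector numbers. This is only a sketch: the helper name `partition_bytes` is made up for illustration, and the 512-byte sector size is an assumption (it is what gdisk normally uses for image files).

```shell
# Size in bytes of a partition spanning sectors first..last inclusive.
# The default sector size of 512 bytes is an assumption.
partition_bytes() {
    first=$1 last=$2 sector_size=${3:-512}
    echo $(( (last - first + 1) * sector_size ))
}

# Sector numbers taken from the gdisk listing above.
partition_bytes 2048 2097118
```

That works out to 1072676352 bytes, just under 1023 MiB, which agrees with the size gdisk printed.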

Let’s fill that partition with some files using guestfish and unmount it:

$ guestfish -a disk.raw run : \

mkfs ext2 /dev/sda1 : mount /dev/sda1 / : \

copy-in ~/libnbd /

$ fusermount3 -u disk.raw

[1]+ Done nbdfuse disk.raw [ qemu-nbd -f qcow2 disk.qcow2 ]

Now the original qcow2 file is no longer empty but populated with a partition, a filesystem and some files. We can see the space used by examining it with virt-df:

$ virt-df -a disk.qcow2 -h

Filesystem Size Used Available Use%

disk.qcow2:/dev/sda1 1006M 52M 903M 6%

Now let’s see the first sector. You can’t just “cat” a qcow2 file because it’s a complicated format understood only by qemu. I can assemble qemu-nbd, nbdcopy and hexdump into a pipeline, where qemu-nbd converts the qcow2 format to raw blocks, and nbdcopy copies those out to a pipe:

$ nbdcopy -- [ qemu-nbd -r -f qcow2 disk.qcow2 ] - | \

hexdump -C -n 512

00000000 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

000001c0 02 00 ee 8a 08 82 01 00 00 00 ff ff 1f 00 00 00 |................|

000001d0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

000001f0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 55 aa |..............U.|

00000200
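The last two bytes of the dump above, 55 aa, are the boot signature that ends the first sector, and the ee at offset 0x1c2 is the partition type of the protective MBR that gdisk writes for a GPT disk. Because the raw blocks arrive on a pipe, the same check can be scripted. The following is a minimal sketch using only standard tools; `has_boot_signature` is a made-up helper name, not part of any of the NBD tools.

```shell
# Succeed if the first 512-byte sector read from stdin ends with the
# two-byte boot signature 55 aa.
has_boot_signature() {
    sig=$(head -c 512 | tail -c 2 | od -An -tx1 | tr -d ' \n')
    [ "$sig" = "55aa" ]
}

# Intended use, fed by the same pipeline as above:
#   nbdcopy -- [ qemu-nbd -r -f qcow2 disk.qcow2 ] - | has_boot_signature
```

The function only consumes the first sector, so it behaves like the `-n 512` limit given to hexdump.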

How about instead of a local file, we start with a disk image hosted on a web server, and compressed? We can do that too. Let’s start by querying the size by composing nbdkit’s curl plugin, xz filter and nbdinfo. nbdkit’s --run option composes nbdkit with an external program, connecting them together over an NBD URI ($uri).

$ web=http://mirror.bytemark.co.uk/fedora/linux/development/rawhide/Cloud/x86_64/images/Fedora-Cloud-Base-Rawhide-20220127.n.0.x86_64.raw.xz

$ nbdkit curl --filter=xz $web --run 'nbdinfo $uri'

protocol: newstyle-fixed without TLS

export="":

export-size: 5368709120 (5G)

content: DOS/MBR boot sector, extended partition table (last)

uri: nbd://localhost:10809/

...

Notice it prints the uncompressed (raw) size. Fedora already provides a qcow2 equivalent, but we can also make our own by composing nbdkit, curl, xz, nbdcopy and qemu-nbd:

$ qemu-img create -f qcow2 cloud.qcow2 5368709120 -o preallocation=metadata

$ nbdkit curl --filter=xz $web \

--run 'nbdcopy -p -- $uri [ qemu-nbd -f qcow2 cloud.qcow2 ]'
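The size given to qemu-img create above was copied by hand from the nbdinfo output. One way to avoid that is to ask for the size directly, sketched below. The helper names `remote_size` and `bytes_to_gib` are hypothetical, and `remote_size` assumes nbdinfo's `--size` option, which prints only the export size in bytes.

```shell
# Print a byte count as whole GiB, or fail if it is not an exact multiple.
bytes_to_gib() {
    gib=$((1024 * 1024 * 1024))
    [ $(( $1 % gib )) -eq 0 ] || return 1
    echo $(( $1 / gib ))
}

# Hypothetical wrapper: print the uncompressed size in bytes of the
# xz-compressed image at the URL in $1. Assumes nbdinfo --size.
remote_size() {
    nbdkit curl --filter=xz "$1" --run 'nbdinfo --size "$uri"'
}

# The export-size reported earlier is exactly 5 GiB.
bytes_to_gib 5368709120
```

With these defined, the destination could be created with something like `qemu-img create -f qcow2 cloud.qcow2 "$(remote_size "$web")" -o preallocation=metadata`.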

Why would you do that instead of downloading and uncompressing? In this case it wouldn’t matter much, but in the general case the disk image might be enormous (terabytes) and you might not have enough local disk space to hold it. Assembling tools into pipelines means you don’t need to keep an intermediate local copy at any point.

We can find out what we’ve got in our new image using various tools:

$ qemu-img info cloud.qcow2

image: cloud.qcow2

file format: qcow2

virtual size: 5 GiB (5368709120 bytes)

disk size: 951 MiB

$ virt-df -a cloud.qcow2 -h

Filesystem Size Used Available Use%

cloud.qcow2:/dev/sda2 458M 50M 379M 12%

cloud.qcow2:/dev/sda3 100M 9.8M 90M 10%

cloud.qcow2:/dev/sda5 4.4G 311M 3.6G 7%

cloud.qcow2:btrfsvol:/dev/sda5/root

4.4G 311M 3.6G 7%

cloud.qcow2:btrfsvol:/dev/sda5/home

4.4G 311M 3.6G 7%

cloud.qcow2:btrfsvol:/dev/sda5/root/var/lib/portables

4.4G 311M 3.6G 7%

$ virt-cat -a cloud.qcow2 /etc/redhat-release

Fedora release 36 (Rawhide)

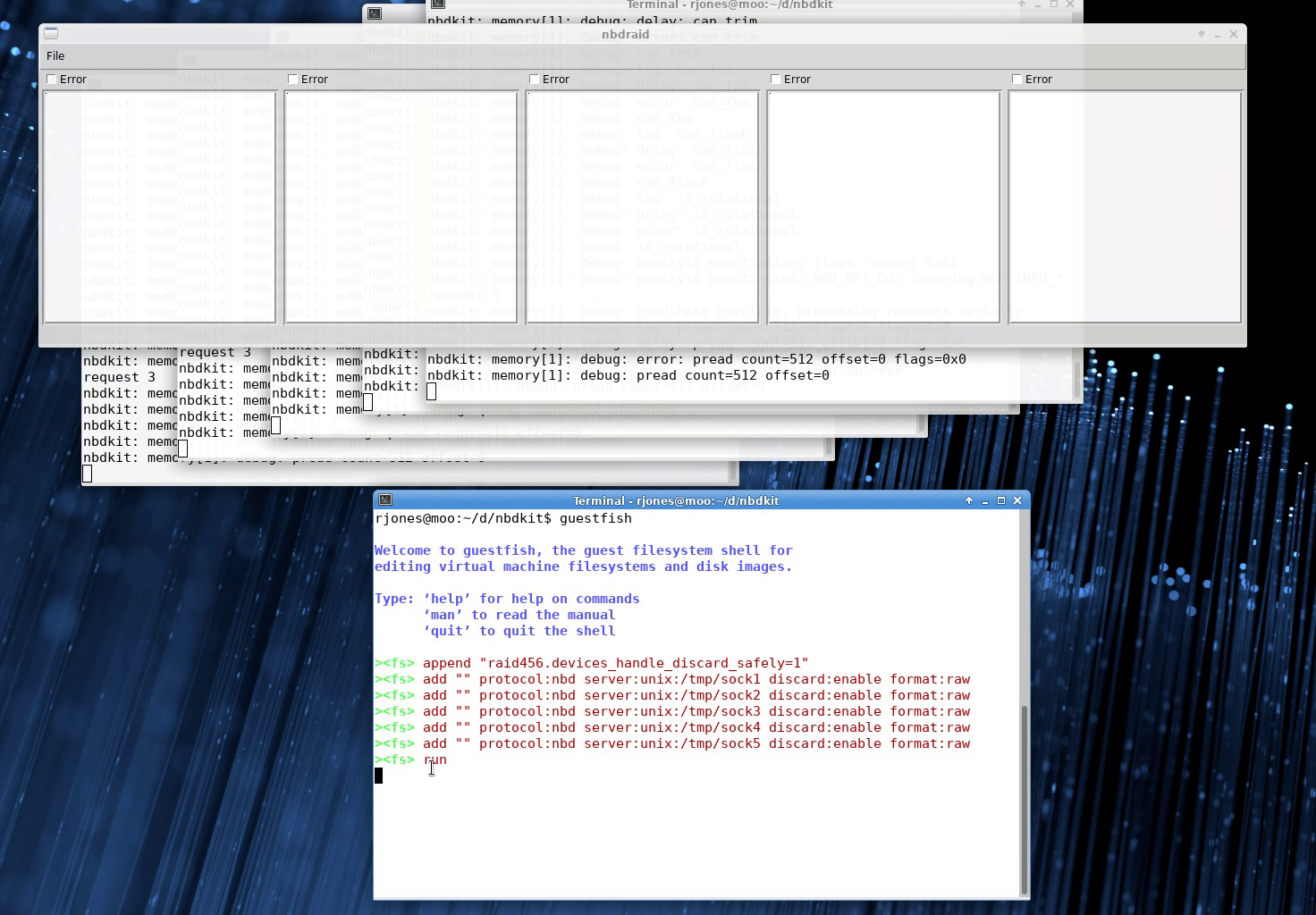

If we wanted to play with the guest in a sandbox, we could stand up an in-memory NBD server populated with the cloud image and connect it to qemu using standard NBD URIs:

$ nbdkit memory 10G

$ qemu-img convert cloud.qcow2 nbd://localhost

$ virt-customize --format=raw -a nbd://localhost \

--root-password password:123456

$ qemu-system-x86_64 -machine accel=kvm \

-cpu host -m 2048 -serial stdio \

-drive file=nbd://localhost,if=virtio

...

fedora login: root

Password: 123456

# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sr0 11:0 1 1024M 0 rom

zram0 251:0 0 1.9G 0 disk [SWAP]

vda 252:0 0 10G 0 disk

├─vda1 252:1 0 1M 0 part

├─vda2 252:2 0 500M 0 part /boot

├─vda3 252:3 0 100M 0 part /boot/efi

├─vda4 252:4 0 4M 0 part

└─vda5 252:5 0 4.4G 0 part /home

/
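The bare nbd://localhost used in the commands above works because NBD URIs fall back to the default NBD port, 10809, the same port nbdinfo printed earlier in nbd://localhost:10809/. The sketch below makes that defaulting explicit. `nbd_host_port` is a made-up helper that handles only plain nbd:// URIs with a hostname or IPv4 address; it does not cover nbd+unix://, TLS variants or IPv6 literals.

```shell
# Print "host port" for a plain nbd:// URI, filling in the default port.
# Simplified on purpose: no nbd+unix://, no IPv6 literals, no export name.
nbd_host_port() {
    rest=${1#nbd://}
    [ "$rest" != "$1" ] || return 1     # not an nbd:// URI
    authority=${rest%%/*}               # drop any /export-name suffix
    case $authority in
        *:*) host=${authority%:*}; port=${authority##*:} ;;
        *)   host=$authority; port=10809 ;;
    esac
    printf '%s %s\n' "$host" "$port"
}

nbd_host_port nbd://localhost
```

Both nbd://localhost and nbd://localhost:10809/ resolve to the same host and port, which is why the short form is enough for qemu-img, virt-customize and qemu itself.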

We can even find out what changed between the in-memory copy and the pristine qcow2 version (quite a lot as it happens):

$ virt-diff --format=raw -a nbd://localhost --format=qcow2 -A cloud.qcow2

- d 0755 2518 /etc

+ d 0755 2502 /etc

# changed: st_size

- - 0644 208 /etc/.updated

- d 0750 108 /etc/audit

+ d 0750 86 /etc/audit

# changed: st_size

- - 0640 84 /etc/audit/audit.rules

- d 0755 36 /etc/issue.d

+ d 0755 0 /etc/issue.d

# changed: st_size

... for several pages ...

In conclusion, we’ve got a couple of ways to serve disk content over NBD, a set of composable tools for copying, creating, displaying and modifying disk content either from local files or over NBD, and a way to pipe disk data between processes and systems.

We use this in virt-v2v which can suck VMs out of VMware to KVM systems, efficiently, in parallel, and without using local disk space for even the largest guest.